Text: Martin Skrodzki | Section: On ‘Art and Science’

Abstract: In recent years, new methods in artificial intelligence have revolutionised several scientific fields. These developments affect the arts in two ways. On the one hand, artists discover machine learning as a new tool. On the other hand, researchers apply the new techniques to the creative work of artists to better analyse and understand it. The workshop AI and Arts brought these two perspectives together and started a dialogue between artists and researchers. It was a satellite workshop of the KI 2019 conference, held in September 2019 in Kassel, Germany. KI 2019 was the 42nd edition of the German Conference on Artificial Intelligence, organised in cooperation with the AI Chapter of the German Society for Computer Science (GI-FBKI).

As methods of artificial intelligence become more and more available and affordable,[1] they spread beyond computer science to various disciplines. Artists were among the first to adopt the new possibilities.[2] This article presents the results of a satellite workshop of the KI 2019 conference[3] in Kassel. The workshop explored relations between artificial intelligence and the arts, focusing on two aspects of the interplay between science and the arts: the use of artificial intelligence as a tool by artists and artificial intelligence as a tool to investigate art.[4] Several articles in w/k have reported on exhibitions,[5] performances,[6] and festivals[7] revolving around artificial intelligence and the arts. Furthermore, artists have positioned themselves and their work in the context of artificial intelligence in w/k.[8] The present article aims to advance and fuel the ongoing discussion by reporting on the results of the workshop.

Workshop Keynote: A taxonomy for AI projects in the arts

In an opening keynote to the workshop, the artist, researcher, and curator Mattis Kuhn presented a taxonomy of AI projects in the artistic realm. He considered six main aspects of using machine learning and illustrated these by several examples from different creators. I briefly present his taxonomy here.

In the generative aspect, the artist trains a machine learning model to create art. Here, the artist controls the training data for the network and can therefore steer the outcome.[9] Despite this control, the artificial intelligence displays the knowledge it has gained and may uncover elements hidden in the data, thereby offering a new perspective to both the artist and the audience.

For classification, several algorithms are available that are pre-trained to fulfil a multitude of tasks. For instance, face recognition is an application of artificial intelligence in the security sector. Artists play with this technique when they explore its limitations and errors. For instance, the works of Adam Harvey explore how to alter a face so that surveillance techniques do not recognise it[10] or how to create a surface that does not resemble a face but that surveillance technology still recognises as one.[11]

All algorithms, even non-deterministic ones, perform fixed computations. The work Content Aware Studies: CAS_08 Hellenistic Ruler[12] (2018) by Egor Kraft uses a machine learning algorithm designed to reconstruct missing parts of broken antique sculptures. The artist manufactures these and inserts them into a replica of the original sculpture. However, despite its fixed intent and programming, the algorithm can also create completely new sculptures. Some of them no longer resemble the input data, as they contain multiple faces or lack facial features like the eyes.

Further, Kuhn coined the term active computation for settings in which the artificial intelligence performs in front of the audience. An example is the work 1 & N chairs[13] (2017) by Fito Segrera. It consists of a chair filmed by a camera. The camera zooms in on the chair, and an image recognition service provides a textual description of the camera image. One screen displays the description while a second screen displays the result of an image search for the description. The installation is constantly creating, never reaching an end.

The work Long Short Term Memory[14] (2017) by Anil Bawa-Cavia deals with the inner structure of artificial intelligence. It visualises the networks that created it. Here, the process of creation is as important as the work itself. Understanding it by illustrating the tool is a first step, but the question remains whether this information can be helpful. The artwork thereby mimics a novel research trend towards explainable artificial intelligence.[15]

The final category according to Kuhn is data. In her 2018 project Myriad (Tulips)[16], the artist Anna Ridler trains a machine learning algorithm on a data set of 10,000 tulip images where she categorised each image by hand. This highlights that without human labelling and without the extensive human labour that goes into this, no machine learning algorithm has the necessary data to learn.

Results of the workshop I: Artificial intelligence as a tool for artists

In the second talk, Katharina Hof from the department of education and culture communication of Ars Electronica Center presented several exhibits from the Understanding AI exhibition. Here, artificial intelligence serves as a means to educate people, with the goal of helping them understand how technology affects them and their lives. When entering the exhibition hall, visitors are confronted with the installation What a Ghost Dreams of.[17] Touching on the categories of classification and active computation as introduced above, a surveillance camera captures images of the visitors. Artificial intelligence changes these into the face of an imaginary person, letting the visitor become part of the art installation. The process happens involuntarily – like video surveillance in real life. A second piece stretching the bounds of classification and reaching into active computation is Learning to See: Gloomy Sunday[18] by Memo Akten. An image creation network trained to create images with a single content – like flowers or fire – interprets input by the visitors. They can, for example, move their hands in front of a camera, and the artificial intelligence creates a corresponding image from this.

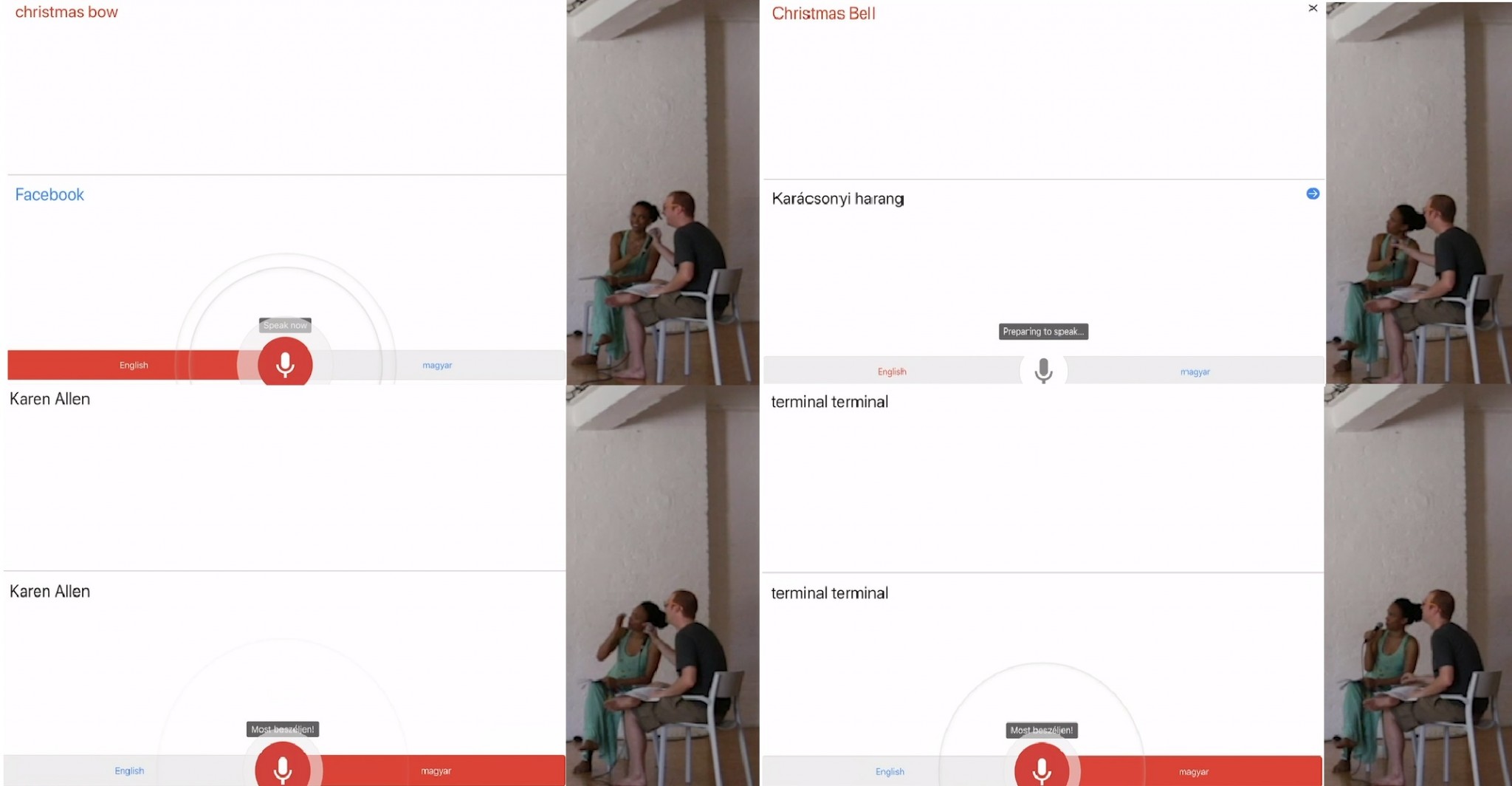

The third talk was given by the stage director Isabelle Kranabetter, who works on the border of classification. She uses human- and machine-based translations in her theatre performance (you hear it) all the time to focus on the role of interpreters as powerful translators. When an actor performs an extremely emotional speech on stage in her own language, and the audience hears the dry machine translation afterwards, it becomes obvious that elements of the performance are lost in the interpretation. When playing a game of telephone in two languages (English and Hungarian), the performers initially struggled with the high quality of the translation. To actually obtain a humorous distortion of the words, they turned to whispering them, which finally threw off the machine translation. However, in their experiments, they also found shortcomings of the translation: the machine translates the English “Brothers and Sisters” into the Hungarian word for “Siblings” and in turn translates this into the English “Brothers”, thereby eliminating the female role. As the spectators watch the tools at work on a screen on stage, in the sense of active computation, they recognise the tools from their everyday use and realise how fragile they are.[19]

The author, choreographer, performer and opera director Thorsten Kreissig presented his early-stage concept of a robot flash mob.[20] He identifies dance as a “social glue” bringing people together. It can occur in ballet movements with complex codification, i.e. choreography. It can also be as simple as dancing “drunk at a party” or as anarchic as at a pogo party. However, any form of dance is independent of language and governed by patterns. This observation motivates Kreissig to propose a robot interface software. The software should ideally be able to handle any robot, independent of its size, the number of legs, or the vendor. As patterns are included in almost any real-world data, Kreissig aims at a spontaneous gathering of robots, at a fair for example, and wants to see them dance according to a given data stream. Finally, he considers artificial intelligence well suited to both the pattern extraction from given data and the posture codification.

The discussions after the talks compared the process of making art using artificial intelligence with the process of detecting a road sign. The underlying techniques are the same. However, artificial intelligence can act as a relief for artists. They do not have to think about any logical or mathematical approach as the machine takes over this part. Therefore, the artist can focus on the real creation. Furthermore, artificial intelligence differs significantly from the human brain: it can forget. That is, it can operate on extremely limited sets of data, which humans cannot, as we always work within the frame of our experiences and memories. When used wisely, this ability to start from scratch can be a powerful tool in the hands of the artist to highlight both possibilities and limitations.

Results of the workshop II: Artificial intelligence as a tool to investigate art

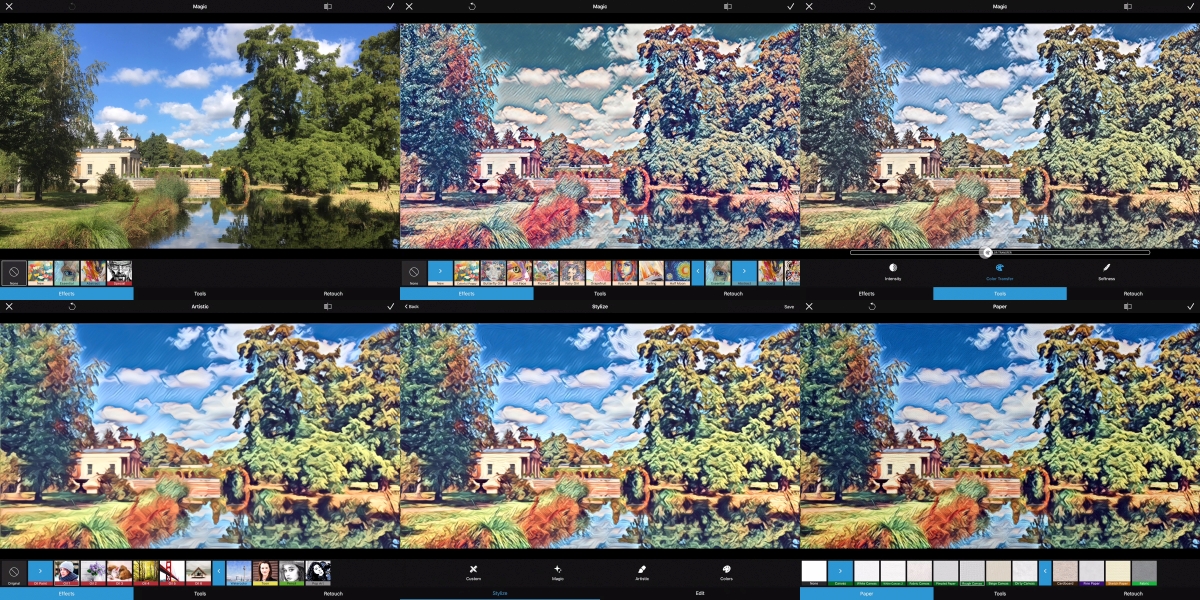

Researcher and entrepreneur Sebastian Pasewaldt and artist Martin Reiter presented their approach to digital creativity pursued by the app BeCasso of the company Digital Masterpieces.[21] The app enables users to turn their own camera images into high-resolution digital artwork. Originally, BeCasso did not include any artificial intelligence features. The creators of the app implemented machine learning only after many corresponding user requests. However, just having the users run new filters on their images was not satisfactory. After carefully looking at the user feedback, the company decided to use a combination of artificial intelligence and conventional image processing tools in the app and to give users control over all processes. Thereby, the users become part of the creative process and can easily become creators themselves.

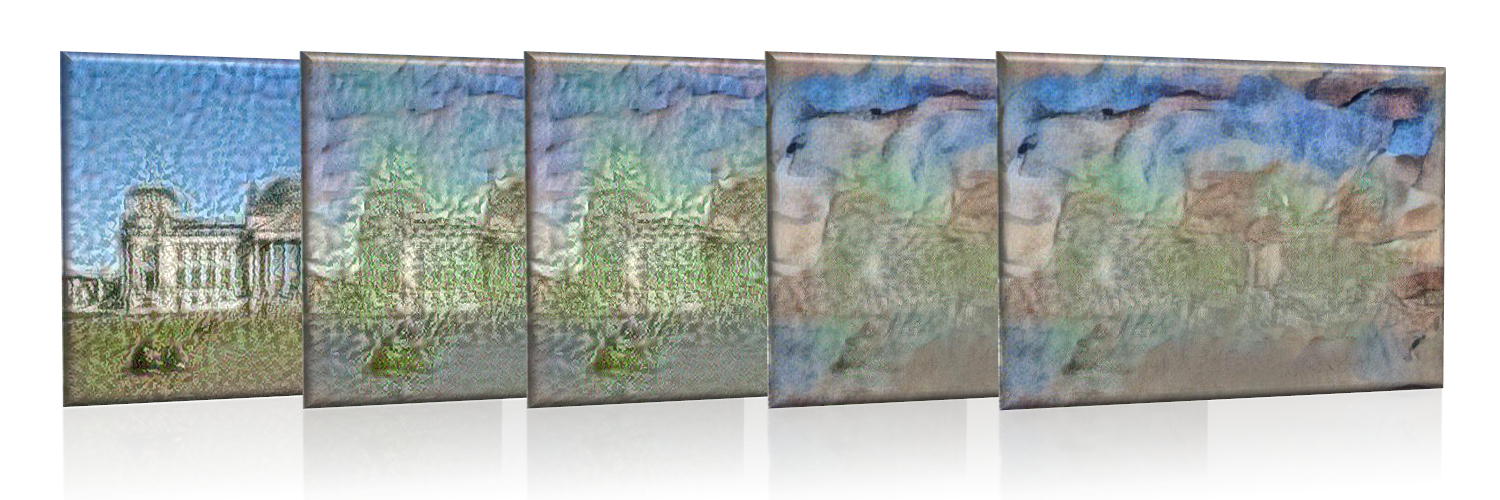

While the approach of Pasewaldt and Reiter allows users to create their own art, the app of Jelena Milovanovic, Miljan Stevanović, and Petar Pejic acts as an explanatory tool for the internal processes of an artist. Given two input images, the researchers extract the style from the first and the content from the second image using deep neural networks. A second machine learning technique then applies the extracted style to the found content and thus renders the second input image in the style of the first one. Finally, an augmented reality app realises the explanatory part: the user can move a smartphone towards or away from an installed image showing only the content, i.e. the second input image. The further the user moves the smartphone away, the more the style of the first input image takes over. Therefore, by starting at a distance and moving the device towards the image, the user sees the stylised image slowly return to its original form, which demystifies the artist’s perception.[22]
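The distance-dependent behaviour of the augmented reality app can be illustrated with a minimal sketch. It is not the researchers' implementation: it assumes that the stylised image has already been computed, that the viewing distance is available in metres, and that the two images are mixed by a simple linear blend. The function names `style_weight` and `blend` as well as the thresholds `near_m` and `far_m` are hypothetical.

```python
def style_weight(distance_m, near_m=0.3, far_m=2.0):
    """Map a viewing distance in metres to a style weight in [0, 1].

    Assumption: pure content at or below near_m, pure style at or
    above far_m, and a linear ramp in between.
    """
    if far_m <= near_m:
        raise ValueError("far_m must be greater than near_m")
    t = (distance_m - near_m) / (far_m - near_m)
    return max(0.0, min(1.0, t))


def blend(content, stylised, weight):
    """Linearly blend two equally sized greyscale images (lists of rows)."""
    return [
        [(1.0 - weight) * c + weight * s for c, s in zip(c_row, s_row)]
        for c_row, s_row in zip(content, stylised)
    ]


if __name__ == "__main__":
    # Tiny 2x2 placeholder images with intensities in [0, 1].
    content = [[0.0, 1.0], [1.0, 0.0]]
    stylised = [[0.5, 0.5], [0.2, 0.8]]
    for distance in (0.3, 1.15, 2.0):
        weight = style_weight(distance)
        print(distance, round(weight, 2), blend(content, stylised, weight))
```

Close to the installed image the weight is zero and only the content is shown; at the far threshold the stylised version takes over completely. A real app could just as well use a non-linear ramp or recompute the style transfer at several strengths.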

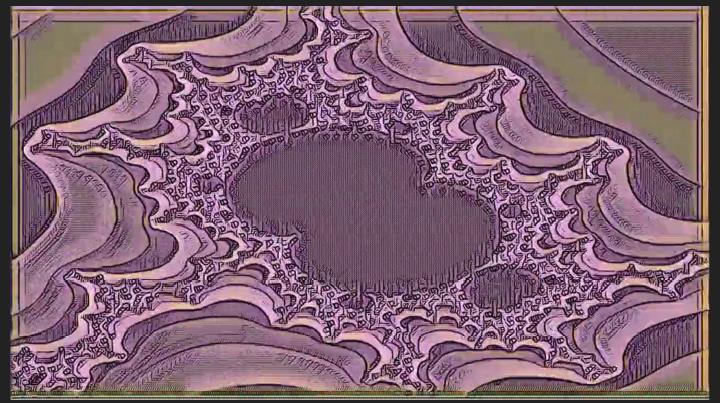

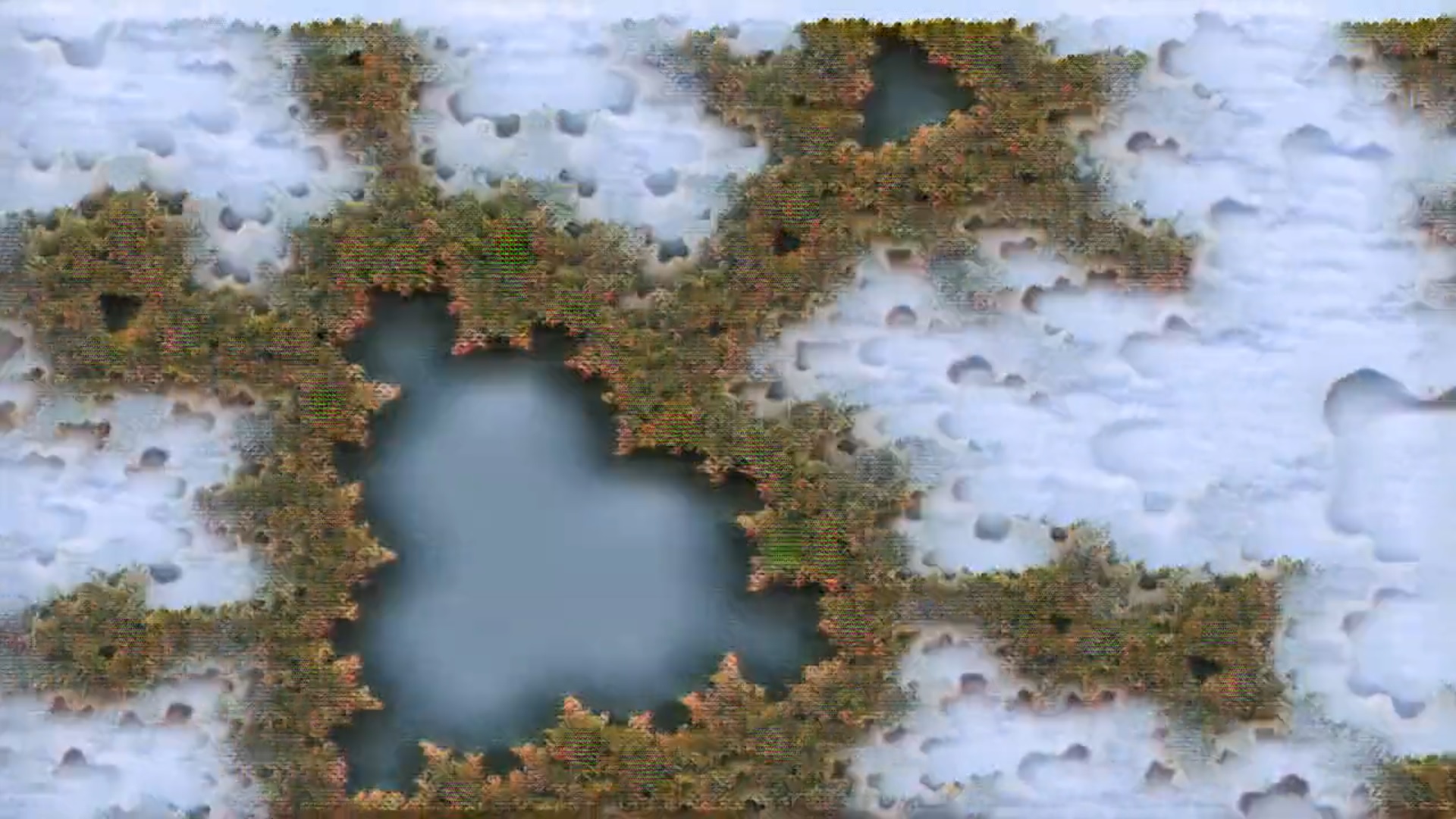

The artist and researcher Martin Pham tackles a problem more complex than transferring a certain style to a single image. He creates videos of mathematical objects and applies artificial neural networks to alter these, image by image. It is a challenging problem, because the neural network does not know the next still in the video when altering a previous one. However, Pham found that applying style transfer to fractal images – self-repeating mathematical structures[23] – indeed gives a coherent result throughout the whole video. He suspects that it has to do with the self-replicating nature of fractal geometries. It seems to guide the artificial intelligence in such a way that it applies the same style to roughly the same features of the image, thereby making the video consistent.[24] His work shows the complex interplay between the input imagery and the artificial intelligence augmentations performed on it.
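For readers unfamiliar with the fractals mentioned here, the following minimal sketch shows the standard escape-time computation for the Mandelbrot set and its multibrot generalisation, which iterate z → z^d + c starting from z = 0. It is not Pham's code and involves no neural network; the function names `escape_time` and `render`, the image size, and the iteration limit are assumptions chosen for illustration only.

```python
def escape_time(c, power=2, max_iter=50, radius=2.0):
    """Count iterations of z -> z**power + c (from z = 0) until |z| > radius.

    Returns max_iter if the orbit stays bounded for all iterations,
    i.e. c is treated as belonging to the set. Intended for integer
    powers of at least 2, for which an escape radius of 2 suffices.
    """
    z = 0j
    for n in range(max_iter):
        if abs(z) > radius:
            return n
        z = z ** power + c
    return max_iter


def render(power=2, width=40, height=20, max_iter=30):
    """Return a coarse ASCII picture: '#' for points in the set, '.' otherwise."""
    rows = []
    for j in range(height):
        im = 1.5 - 3.0 * j / (height - 1)
        row = ""
        for i in range(width):
            re = -2.0 + 3.0 * i / (width - 1)
            inside = escape_time(complex(re, im), power, max_iter) == max_iter
            row += "#" if inside else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    # power=2 gives the Mandelbrot set; power=3 gives a multibrot set.
    print("\n".join(render(power=2)))
```

Zooming into the boundary of such a set reveals similar shapes at every scale, which is the self-similarity that Pham suspects helps the style transfer remain consistent from frame to frame.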

Conclusion

Picasso said, “Computers are useless, they can only give answers.”[25] The workshop AI and Arts showed that computers offer much more. They are tools and inspiration. They take the artist on a journey while the artist provides them with data.

The interest in computer-generated art is enormous. More and more artists generate works of art using artificial intelligence. Recently, these works began to pay off for their creators. For instance, the auction house Christie’s sold an artwork created by artificial intelligence for $432,500 during an auction in October 2018.[26] Although KI 2019 hosted other interesting and inspiring workshops, the Heise online magazine reported on the AI and Arts workshop, sparking several comments on creativity and creation.[27]

Finally, the workshop gave academics and artists working in the field of AI a platform to meet and discuss their work as well as to give each other feedback. While the presented workshop – the first of its kind in Germany – could only give a small impression of this buzzing field, follow-up workshops will certainly continue to elucidate the intricate interplay of AI and arts and thereby open new cross-disciplinary horizons.

Picture above the text: Martin Pham: Mandelbrot / Momentarily II (2019). Photo: Martin Pham.

[1] Notable developments include e.g. the TensorFlow machine learning library. Google published it under the Apache-2.0-OpenSource license; see https://www.tensorflow.org. A very accessible webpage to start with first experiments involving artificial intelligence is the Neural Network Playground, which uses TensorFlow internally. At https://playground.tensorflow.org, everyone can quickly play with the powerful library.

[2] See https://aiartists.org for a compilation on “the impact of AI on art, culture, and society.”

[3] For more information on the conference, see www.ki2019.de. For the workshop programme, see https://www.ki2019.de/events/.

[4] For more information on the workshop, see https://page.mi.fu-berlin.de/mskrodzki/aaa/.

[5] See e.g. https://wissenschaft-kunst.de/veranstaltungen/pendoran-vinci-kunst-und-kuenstliche-intelligenz-heute/, https://wissenschaft-kunst.de/kunst-und-wissenschaft-in-anderen-medien-teil-i/?highlight=k%C3%BCnstliche%20Intelligenz, points nine and fifteen.

[6] See e.g. https://wissenschaft-kunst.de/kunst-und-wissenschaft-in-anderen-medien-2/?highlight=k%C3%BCnstliche%20Intelligenz, point four.

[7] See e.g. https://wissenschaft-kunst.de/kunst-und-wissenschaft-in-anderen-medien-teil-iv/?highlight=k%C3%BCnstliche%20Intelligenz, point seven.

[8] See e.g. https://wissenschaft-kunst.de/markus-schrenk-true-copy/ or https://wissenschaft-kunst.de/birgitta-weimer-kunst-als-forschung/.

[9] Consider for example the works of Paglen, T. (2017). Adversarially Evolved Hallucinations: Comet & Vampire. The works are on display at https://www.metropictures.com/exhibitions/trevor-paglen4/selected-works?view=slider#5 and https://www.metropictures.com/exhibitions/trevor-paglen4/selected-works?view=slider#13 respectively.

[10] Harvey, A. (2010). CV Dazzle (Look 1). https://cvdazzle.com/.

[11] Harvey, A. (in development). Hyperface. https://ahprojects.com/hyperface/.

[12] http://egorkraft.com/cas/.

[13] https://www.fkv.de/en/fito-segrera-2/.

[14] https://zkm.de/en/long-short-term-memory.

[15] Although there is no standard definition of explainable artificial intelligence yet, the general goal is to understand how exactly a machine learning algorithm came to make a certain decision. With an increasing number of artificial-intelligence-based algorithms performing automated decisions, this field is of growing importance. For a general discussion, see Holzinger, A. (2018). Explainable AI (ex-AI). In: Informatik-Spektrum 41 (2), pp. 138–143.

[16] https://annaridler.com/myriad-tulips.

[17] https://ars.electronica.art/center/de/what-a-ghost-dreams-of/.

[18] https://www.memo.tv/portfolio/learning-to-see/.

[19] Find the portfolio of Isabelle Kranabetter here: http://isabellekranabetter.com/?page_id=2.

[20] Find more information on the project, further developments and ideas at .

[21] See https://www.digitalmasterpieces.com for the company website and https://www.digitalmasterpieces.com/becasso/ for details on the app.

[22] The processes of artistic creation have been the subject of research in various other studies. See e.g. Adina, M. (2018). Demystifying Creativity: An assemblage perspective towards artistic creativity. In: Creative Studies 11 (1), pp. 85–101. For artists’ perspectives specifically, cf. Chapter 1 of Carey, B. (2016). The Art World Demystified – How artists define and achieve their goals. Allworth Press, New York.

[23] Several researchers described self-similar structures throughout the 17th, 18th, and 19th centuries. Benoit Mandelbrot gave them the name fractals and popularised them using state-of-the-art computer graphics; see Mandelbrot, B. (1983). The fractal geometry of nature. WH Freeman, New York.

[24] See the machine learning altered multibrot video here: https://vimeo.com/302757747.

[25] Shapiro, F. R. (2006). The Yale Book of Quotations. Yale University Press, New Haven, p. 591.

[26] See https://www.christies.com/features/A-collaboration-between-two-artists-one-human-one-a-machine-9332-1.aspx.

[27] See https://heise.de/-4538032.

How to cite this article

Martin Skrodzki (2019): AI and Arts – A Workshop to Unify Arts and Science. w/k–Between Science & Art Journal. https://doi.org/10.55597/e5342
